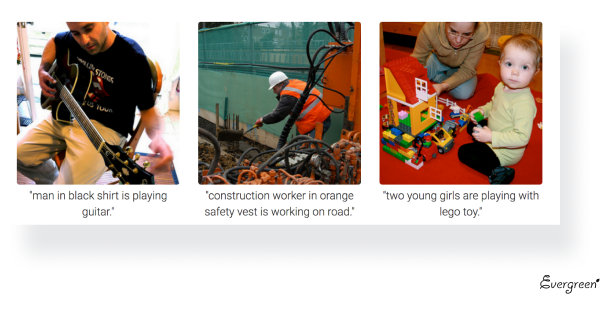

Billions of images are taken every day. Classifying and organizing them so that a specific group of pictures, or a single image, can be retrieved quickly and easily is a challenging task. In our previous article, we wrote about the role of image annotation in AI development and, in particular, about how this technology helps teach machines to recognize objects in visual media. How can the ability of computers to ‘see’ and ‘understand’ images help us solve more practical business tasks?

Automatic image captioning is widely used by search engines to retrieve and display relevant results based on annotation keywords, to categorize personal multimedia collections, to tag products automatically in online catalogs, in computer vision development, and in other areas of business and research. We’ve picked some interesting use cases where this technology can be both helpful and profitable.

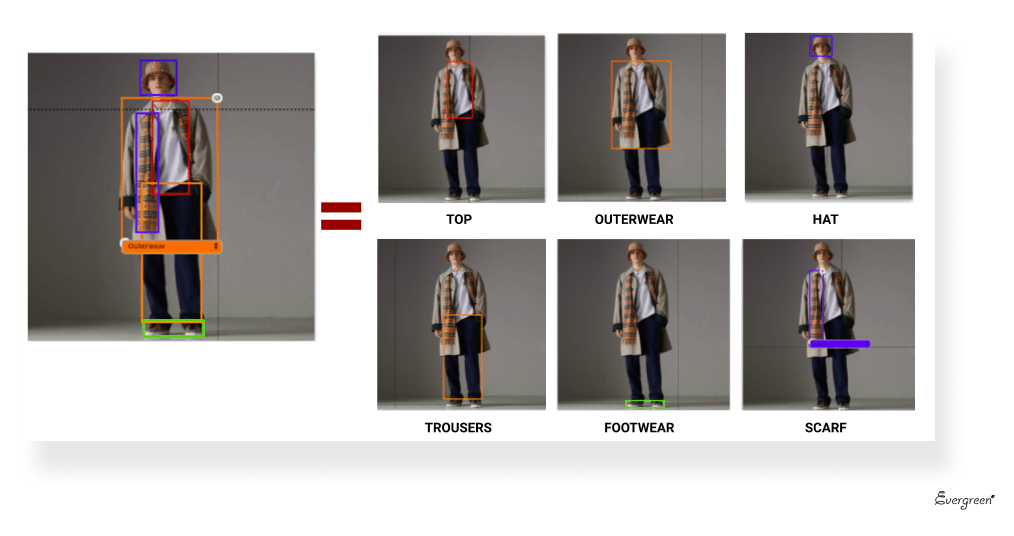

AI-assisted PIM (Product Information Management) systems can analyze images and automatically generate rich, detailed attributes for online catalogs. Automatic product tagging saves time and costs: image captioning software analyzes product images and suggests relevant attributes and categories. For example, the system can detect the type of fashion item, its material, color, pattern, clothing fit, etc. With AI-powered visual recommendations, customers can navigate through categories more conveniently. Brands such as ASOS, eBay, and Forever21 already use AI-based visual search and image recognition to engage customers.
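
To illustrate the suggestion step, here is a minimal Python sketch. It assumes, hypothetically, that separate image classifiers have already produced a probability for each candidate value of each attribute (item type, color, pattern, fit); the function name, attribute names, scores, and the 0.6 threshold are illustrative and not taken from any particular PIM product. The sketch keeps the most likely value per attribute only when it is confident enough.

```python
from typing import Dict

# Hypothetical per-attribute classifier output for one product photo:
# attribute -> {candidate value -> probability}. In practice these scores
# would come from trained image models; the numbers below are made up.
AttributeScores = Dict[str, Dict[str, float]]


def suggest_attributes(scores: AttributeScores, threshold: float = 0.6) -> Dict[str, str]:
    """Return the most likely value for each attribute whose confidence
    reaches the threshold; weaker predictions are left for manual review."""
    suggestions: Dict[str, str] = {}
    for attribute, candidates in scores.items():
        if not candidates:
            continue
        value, confidence = max(candidates.items(), key=lambda item: item[1])
        if confidence >= threshold:
            suggestions[attribute] = value
    return suggestions


product_scores: AttributeScores = {
    "item_type": {"dress": 0.91, "skirt": 0.06, "top": 0.03},
    "color": {"red": 0.72, "burgundy": 0.25, "pink": 0.03},
    "pattern": {"solid": 0.48, "floral": 0.41, "striped": 0.11},
    "fit": {"regular": 0.81, "slim": 0.19},
}

print(suggest_attributes(product_scores))
# {'item_type': 'dress', 'color': 'red', 'fit': 'regular'}
```

Here `pattern` is dropped because its best score (0.48) is below the threshold; in a real catalog workflow, such attributes could be routed to a human editor instead of being filled in automatically.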

Assigning relevant keywords that reflect an image's visual content improves how search engines index and retrieve it, which can raise your ranking for image keywords. Artificial intelligence and machine learning algorithms can fill out ALT attributes automatically based on image analysis. For example, the Image SEO plugin for WordPress can automatically rename files, create fairly accurate ALT and description attributes, and fill them with SEO-friendly content. Google's image analysis tool, Vision API, uses state-of-the-art recognition models to parse each image and return labels for every object in it that it can identify.
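
The ALT-text idea can be sketched in a few lines of Python. This is a hypothetical illustration, not the Image SEO plugin's or Google's implementation: it assumes the labels have already been obtained as `(description, score)` pairs, similar in spirit to the labels a label-detection service such as Vision API returns, and it simply keeps the most confident ones. `build_alt_text`, `seo_file_name`, and the thresholds are made up for this example.

```python
import re
from typing import List, Tuple


def build_alt_text(labels: List[Tuple[str, float]], min_score: float = 0.7,
                   max_labels: int = 4) -> str:
    """Turn (label, score) pairs into a short, human-readable ALT text."""
    ranked = sorted(labels, key=lambda item: item[1], reverse=True)
    kept = [name for name, score in ranked if score >= min_score][:max_labels]
    if not kept:
        return ""  # nothing confident enough: leave ALT text for a human
    text = kept[0] if len(kept) == 1 else ", ".join(kept[:-1]) + " and " + kept[-1]
    return text[0].upper() + text[1:]


def seo_file_name(alt_text: str, extension: str = "jpg") -> str:
    """Derive a lowercase, hyphen-separated file name from the ALT text."""
    slug = re.sub(r"[^a-z0-9]+", "-", alt_text.lower()).strip("-")
    return f"{slug or 'image'}.{extension}"


labels = [("dog", 0.97), ("grass", 0.88), ("frisbee", 0.81), ("sky", 0.55), ("pet", 0.93)]
alt = build_alt_text(labels)
print(alt)                 # Dog, pet, grass and frisbee
print(seo_file_name(alt))  # dog-pet-grass-and-frisbee.jpg
```

Labels below the confidence threshold (here, "sky") are left out, which trades completeness for fewer wrong descriptions.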

We can create products that help blind and visually impaired people navigate everyday situations without relying on anyone else. One way to do this is to convert the scene into text first and then the text into speech (both are now well-established application fields of deep learning).

Seeing AI, an app developed by Microsoft, helps blind and low-vision people perceive the world around them using their smartphones. The app reads text as soon as it appears in front of the camera, provides audio guidance, recognizes both printed and handwritten text, helps identify friends and family members, describes people nearby, identifies currency, and much more.

Aira, a successful California startup, has developed AR glasses for people with low vision, supported by an AI-powered assistance service called ‘Chloe’. The company trains its deep neural networks on an array of NVIDIA RTX 2080 Ti GPUs and relies on an exceptionally well-labeled dataset for image and natural language processing.

Security data annotation can be used in various security-related applications:

CCTV cameras are everywhere today. If, in addition to recording the scene, they could generate relevant captions, alarms could be raised as soon as malicious activity is detected. AI-based algorithms help assign labels to any type of security imaging data, teaching your systems to react to potentially dangerous situations. This could help reduce crime rates and the number of accidents.
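
As a rough sketch of the alerting step, the Python below scans a stream of generated captions for watchlist terms. Everything here is hypothetical: the `(timestamp, caption)` input format, the `WATCHLIST` terms, and `find_alerts`. Plain keyword matching is deliberately naive and only shows how captions can drive alarms; a production system would more likely classify frames or captions with a trained model.

```python
import re
from typing import Iterable, List, Tuple

# Hypothetical watchlist; a real deployment would tune this per site and would
# more likely use a trained classifier than plain keyword matching.
WATCHLIST = ("fire", "smoke", "fallen", "climbing over", "weapon")


def find_alerts(captions: Iterable[Tuple[str, str]],
                watchlist: Tuple[str, ...] = WATCHLIST) -> List[Tuple[str, str, str]]:
    """Return (timestamp, matched term, caption) for each caption that
    mentions a watchlist term as a whole word or phrase."""
    alerts = []
    for timestamp, caption in captions:
        text = caption.lower()
        for term in watchlist:
            if re.search(r"\b" + re.escape(term) + r"\b", text):
                alerts.append((timestamp, term, caption))
                break  # one alert per caption is enough
    return alerts


stream = [
    ("12:00:01", "a man walking a dog in a parking lot"),
    ("12:00:05", "a person climbing over a fence at night"),
    ("12:00:09", "smoke rising from a parked car"),
]
for alert in find_alerts(stream):
    print(alert)
```

With the sample stream, the second and third captions trigger alerts ("climbing over" and "smoke"). The word-boundary pattern avoids false hits such as "fire" inside "firefly".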

We've selected some open-source solutions that can facilitate the process of manual image captioning by generating fairly accurate text descriptions and can be used as a base to develop a custom solution for your particular business needs.

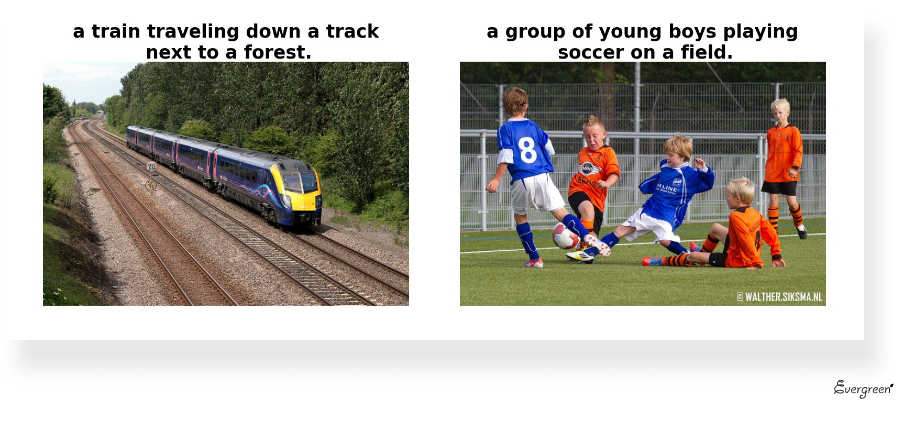

This neural image captioning system takes an image as input and outputs a sentence describing its visual content. The project uses a convolutional neural network (CNN) to extract visual features and a recurrent neural network (RNN) to translate these features into text. Both the CNN and RNN parts can be further trained using the TensorFlow library.
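
The decoding loop of such a CNN-to-RNN captioner can be sketched as follows. This is a simplified, framework-free illustration, not the project's code: the trained RNN is replaced by a hypothetical `toy_step` function, and the CNN output is replaced by a made-up two-number feature vector (dog-likeness, cat-likeness). The loop uses greedy decoding, appending the single most probable next word until the model emits `<end>`.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical decoder step: given the image features and the words generated
# so far, return a probability distribution over the next word. In the real
# project this role is played by an RNN conditioned on CNN features.
DecoderStep = Callable[[Sequence[float], List[str]], Dict[str, float]]


def greedy_caption(features: Sequence[float], step: DecoderStep, max_len: int = 10) -> List[str]:
    """Generate a caption by always taking the single most probable next word."""
    words = ["<start>"]
    for _ in range(max_len):
        probs = step(features, words)
        next_word = max(probs, key=probs.get)
        if next_word == "<end>":
            break
        words.append(next_word)
    return words[1:]  # drop the <start> token


def toy_step(features: Sequence[float], words: List[str]) -> Dict[str, float]:
    """Toy stand-in for the RNN: features = (dog_score, cat_score)."""
    dog_score, cat_score = features
    last = words[-1]
    if last == "<start>":
        return {"a": 1.0}
    if last == "a":
        total = dog_score + cat_score
        return {"dog": dog_score / total, "cat": cat_score / total}
    if last in ("dog", "cat"):
        return {"is": 0.7, "<end>": 0.3}
    if last == "is":
        return {"running": 0.6, "sleeping": 0.4}
    return {"<end>": 1.0}


print(greedy_caption([0.9, 0.1], toy_step))  # ['a', 'dog', 'is', 'running']
print(greedy_caption([0.2, 0.8], toy_step))  # ['a', 'cat', 'is', 'running']
```

The image features steer which noun is chosen, while the rest of the sentence comes from the language model; a real encoder would produce a much larger feature vector that conditions the RNN's hidden state.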

Caption_generator is a modular library built on top of Keras/TensorFlow that generates natural-language (English) captions for any input image. It consists of three models: an encoder CNN model, a word embedding model, and a decoder RNN model. The system can generate relatively accurate image captions.

As the name suggests, this solution was developed to recognize different car models using deep learning. It uses the Stanford Cars Dataset, which contains more than 16K images of 196 car classes. You can also use a pre-trained model as a demo to create annotations for your own image collection.

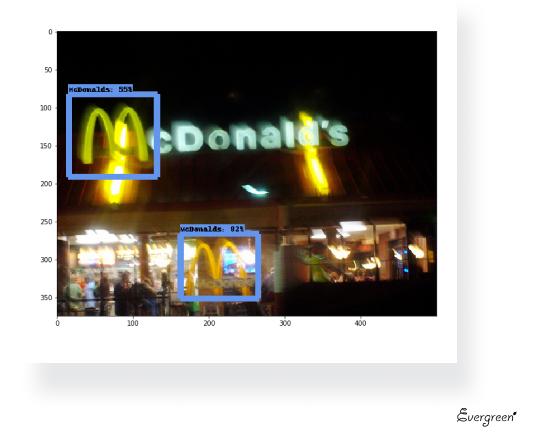

This is a brand logo detection system built with the TensorFlow Object Detection API. You can create a custom logo detection algorithm using one of the pre-trained models provided with the package. A text label for the detected brand logo is drawn on the image, but it is also possible to extract this data as text captions.
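
Turning detections into text captions can be sketched like this. The sketch does not call the Object Detection API itself; it assumes the detector's outputs have already been converted to plain Python lists in the form used by the API's examples: normalized `[ymin, xmin, ymax, xmax]` boxes, class ids, scores, and a `category_index` that maps each id to a dict with a `name`. The function name, label map, and detections below are hypothetical.

```python
from typing import Dict, List, Sequence


def detections_to_captions(boxes: Sequence[Sequence[float]],
                           classes: Sequence[int],
                           scores: Sequence[float],
                           category_index: Dict[int, Dict[str, object]],
                           min_score: float = 0.5) -> List[str]:
    """Convert detector outputs into one text caption per confident detection.
    Boxes are assumed to be normalized [ymin, xmin, ymax, xmax]."""
    captions = []
    for box, cls, score in zip(boxes, classes, scores):
        if score < min_score:
            continue  # skip weak detections
        name = category_index.get(int(cls), {}).get("name", "unknown")
        ymin, xmin, ymax, xmax = box
        captions.append(
            f"{name} logo ({score:.0%}) at x={xmin:.2f}-{xmax:.2f}, y={ymin:.2f}-{ymax:.2f}"
        )
    return captions


# Hypothetical label map and detector output for a single image.
category_index = {1: {"id": 1, "name": "brand_a"}, 2: {"id": 2, "name": "brand_b"}}
boxes = [[0.10, 0.20, 0.40, 0.50], [0.60, 0.60, 0.90, 0.95]]
classes = [2, 1]
scores = [0.93, 0.31]
print(detections_to_captions(boxes, classes, scores, category_index))
# ['brand_b logo (93%) at x=0.20-0.50, y=0.10-0.40']
```

The resulting strings can be stored as searchable captions alongside the image instead of only being drawn on it.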

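This is another neural network that generates image captions using a CNN and an RNN, combined with beam search. Instead of keeping only the single most likely word at each step, beam search keeps several of the most probable partial captions, which makes it more likely to find the caption with the highest overall probability for a particular image.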
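
Here is a minimal, framework-free sketch of beam search in Python. The next-word model is a hypothetical lookup table (`TOY_MODEL`) standing in for the trained CNN/RNN; scores are accumulated as log-probabilities to avoid numerical underflow. With `beam_width=1` the procedure reduces to greedy decoding, which makes the benefit of a wider beam easy to see.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical next-word model: maps the words so far to next-word probabilities.
NextWord = Callable[[List[str]], Dict[str, float]]


def beam_search(step: NextWord, beam_width: int = 3, max_len: int = 10) -> Tuple[List[str], float]:
    """Keep the `beam_width` most probable partial captions at every step and
    return the best caption found together with its probability."""
    beams: List[Tuple[float, List[str]]] = [(0.0, ["<start>"])]  # (log-prob, words)
    finished: List[Tuple[float, List[str]]] = []
    for _ in range(max_len):
        candidates = [
            (log_p + math.log(p), words + [word])
            for log_p, words in beams
            for word, p in step(words).items()
            if p > 0
        ]
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for log_p, words in candidates[:beam_width]:
            if words[-1] == "<end>":
                finished.append((log_p, words))  # complete caption
            else:
                beams.append((log_p, words))     # still being extended
        if not beams:
            break
    pool = finished + beams
    if not pool:
        return [], 0.0
    best_log_p, best_words = max(pool, key=lambda c: c[0])
    caption = [w for w in best_words if w not in ("<start>", "<end>")]
    return caption, math.exp(best_log_p)


TOY_MODEL = {
    "<start>": {"a": 0.6, "two": 0.4},
    "a": {"dog": 0.4, "cat": 0.35, "man": 0.25},
    "two": {"dogs": 0.9, "cats": 0.1},
    "dog": {"playing": 0.8, "<end>": 0.2},
    "dogs": {"playing": 0.8, "<end>": 0.2},
}


def toy_step(words: List[str]) -> Dict[str, float]:
    return TOY_MODEL.get(words[-1], {"<end>": 1.0})


for width in (1, 2):
    caption, prob = beam_search(toy_step, beam_width=width)
    print(width, caption, round(prob, 3))
# 1 ['a', 'dog', 'playing'] 0.192
# 2 ['two', 'dogs', 'playing'] 0.288
```

Greedy decoding commits to "a" because it is the most likely first word and ends with probability 0.192, while a beam of width 2 keeps "two" alive and finds "two dogs playing" (0.288). Because summed log-probabilities favor shorter sequences, many captioning implementations also apply length normalization; it is omitted here for brevity.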

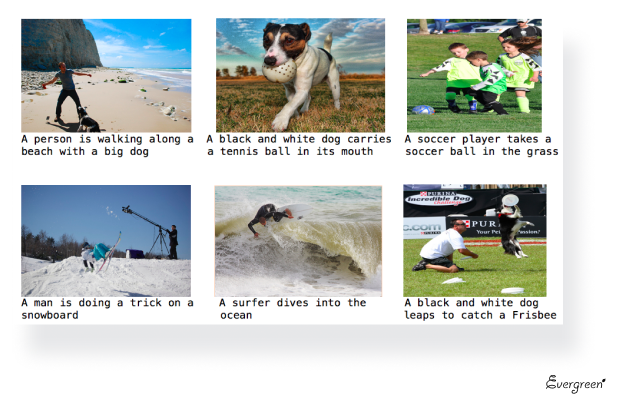

Another platform, CloudCV, offers an interesting visual question answering (VQA) service. Given an image and a question about it in natural language, a VQA system uses deep learning algorithms to try to answer the question correctly. These questions require an understanding of language, vision, and common-sense knowledge to answer. The VQA dataset contains more than 265K images (COCO and abstract scenes), more than 614K free-form natural language questions (approx. 3 questions per image), and over 6 million free-form (but concise) answers (10 answers per question).

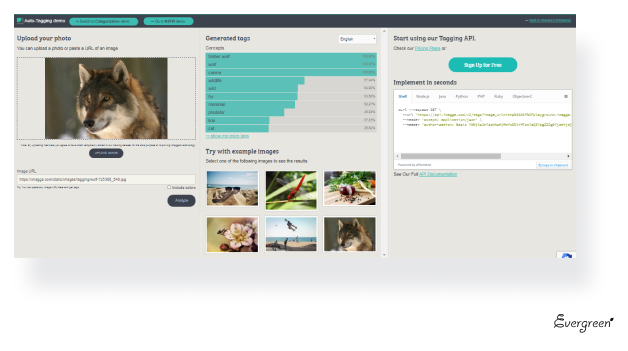

Several compelling solutions already exist on the market, developed to automatically generate image captions for commercial needs (e.g., arranging online catalogs), for fast and convenient multimedia processing, and various projects based on object recognition. Here are some of our picks.

This AI-powered image tagging API assigns relevant keywords and text to images and videos. It uses deep learning algorithms to analyze the pixel content of pictures, extract visual features, and detect objects of interest, and it can accurately recognize objects, scenes, and concepts.

A ‘tool for fashion analysis and discovery’ that automatically assigns high-quality product tags to catalogs. The system suggests more than 300 tags based on images from more than 60 categories (apparel, fashion, jewelry, and more). Its other AI features include search by image, similar-item recommendations, and a personalized style advisor.

A built-in AI algorithm of this platform automatically scans and captions images using keywords already stored in the system. These auto-tagged keywords are searchable within Skyfish, so finding an image again is easy. However, auto-tagging exists only inside the platform. Once you export an image outside of Skyfish, all automatic captions will be deleted.

It is a powerful platform designed to automate image and video analysis with machine learning. Amazon Rekognition Custom Labels lets you identify business-specific objects and scenes in images and extract information from them: find your logo on social media, identify your products on store shelves, classify machine parts on an assembly line, and much more. The platform also supports face detection and analysis, text detection in images and videos, celebrity recognition, person pathing, and other features.
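
A call to a trained Custom Labels model from Python might look like the sketch below. It uses the `detect_custom_labels` operation of the boto3 Rekognition client; the helper name, the 70% default confidence, and the ARN placeholder are assumptions for illustration. The client is passed in as a parameter so the response handling can be tried without AWS access.

```python
from typing import Any, List, Tuple


def custom_labels_for_image(client: Any, project_version_arn: str, image_bytes: bytes,
                            min_confidence: float = 70.0) -> List[Tuple[str, float]]:
    """Run a trained Custom Labels model on one image and return
    (label name, confidence %) pairs, most confident first."""
    response = client.detect_custom_labels(
        ProjectVersionArn=project_version_arn,
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    labels = [(label["Name"], label["Confidence"])
              for label in response.get("CustomLabels", [])]
    return sorted(labels, key=lambda item: item[1], reverse=True)


# Usage sketch (needs boto3, AWS credentials, and a running model version):
# import boto3
# client = boto3.client("rekognition")
# with open("shelf.jpg", "rb") as f:
#     print(custom_labels_for_image(client, "<your-project-version-arn>", f.read()))
```

A Custom Labels model version has to be started (and is billed while running) before `detect_custom_labels` can be called; the returned `Confidence` values are percentages from 0 to 100.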

Facebook uses object recognition technology to automatically create alternative (alt) text describing a photo for blind and visually impaired people. If objects are identified, a user can hear a list of items the picture might contain, as well as the description written by the person who uploaded the photo, the number of likes, comments, etc. This alternative text can also be replaced to provide a better description, an option that might be very useful for content managers.

We at Evergreen prefer to use TensorFlow — an open-source machine learning framework — to train the deep learning models to develop our AI products. Our specialists have many years of experience in implementing object recognition and visual search in the clients’ projects. Would you like to learn more about the use of this technology? Don’t hesitate to contact us.

Automatic image captioning (tagging) lets you organize images with less time and effort: the system handles the routine work, using machine learning to 'read' the visual content and generate text descriptions of what is shown in the picture. As a result, images become more accessible to users and search engines, a benefit with many practical uses.

We at Evergreen have expertise in using AI technologies and machine learning to develop projects in the field of visual search, face, and object recognition for different businesses. Our team can develop, support, and enhance a personalized solution for a client: use open-source solutions to build an MVP quickly and cost-effectively, support the project at every growth stage, and plan for future scalability from the very start.

Would you like to develop a custom image-captioning tool for your business or eCommerce project? Are you interested in creating a complex product that uses object or face recognition, or other elements of computer vision and AI? Please contact us right away. Let’s start working together today and bring the power of innovation to your company!