Visual search is a growing trend in the consumer goods market: point a camera at the item you are interested in or take a picture of it, and the system will find the product in an online store among millions of options in seconds.

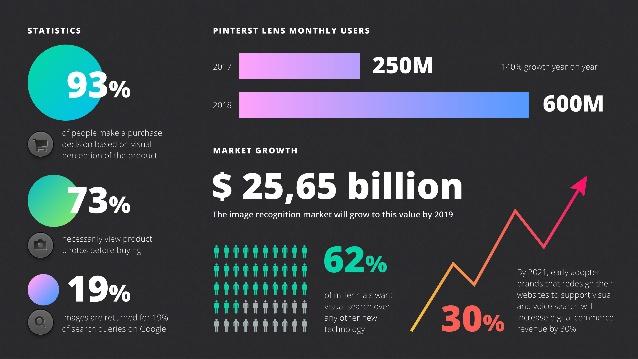

A look at the numbers makes the role of visual search in the future of e-commerce clear.

With visual object recognition systems, you no longer need to describe a product's color, design, or other attributes to find what you need and see its specifications and price.

The following global retail companies already use visual search:

ASOS, eBay, Neiman Marcus, Ted Baker, Blippar, EasyJet, Levi’s, Disney, Walmart, Salesforce, Syte, Houseology, CarStory, Snapchat, Farfetch, Marks, Pinterest, Amazon

Possible use cases

Industrial goods. When you need to find electronics, machinery parts, or goods for the B2B sector, you have to use the correct terms, models, and serial numbers. Sometimes the term is forgotten, or it takes too much time to type the exact model names, dimensions, and numbers. Visual search solves this problem on the fly and finds the needed item from a photo in seconds.

Furniture. The concept is very simple: point the camera at any piece of furniture in a house, cafe, store, or even a printed catalog and immediately get a few links to purchase it. The system identifies the piece of furniture (a sofa, chair, carpet, or lamp), finds it among the options in its internal catalog, and immediately offers to sell it. GrokStyle already uses this approach.

Used car sales. For those who want to buy a used car, visual search on the CarStory resource has simplified the process. You take a picture of any vehicle you like, without even knowing its model, and the system finds that car for sale on the website. Thus, any parking lot in a city or even a country becomes a virtual car dealership that takes a person to an online car store in a second.

Real estate companies. With the help of AI visual search, the process becomes much faster and easier for buyers, as well as more convenient for brokers to control. For example, the system classifies all the houses for sale by specific criteria: “Hi-tech houses”, “Duplexes”, “3-bedroom houses”, “Two-story houses”, etc. Based on the user's request, the system offers matching houses for sale along with information about them: location, cost, and number of bedrooms and bathrooms.

Working at the cutting edge of product development, we spent nearly a year building and tuning a solution for speed and accuracy. As a result, we made a product that can find the needed item among thousands of similar ones with high accuracy.

Our product is a building kit that can be adapted to any type of goods. It provides visual product recognition through a GPU-based solution built on an ensemble of custom convolutional neural networks (CNNs). To take advantage of this feature, the buyer photographs the non-working device and sends the photo to the sales director. Let’s take a closer look at the system and how it was made.

The remote control recognition system can find the correct product ID among a thousand similar products in a database from a photo, removing the human factor and the dependence on staff experience.

High recognition results. A neural network can tell apart items that look identical. Even the most experienced employee, whose training takes a lot of time and money, could not match this speed and accuracy.

Smartphone-friendly. Because buyers will mainly use a smartphone camera to take photos, we took a number of related factors into account during neural network training.

Neural network power. The neural network ensemble behind the remote control identification function determines the model accurately, regardless of the language of the buttons and labels.

Quick retraining. The solution allows retraining without the participation of a developer, so you can easily and efficiently retrain the network with new products.

To turn object recognition into reality, we used neural network training, a type of machine learning.

Preparing data. We created thousands of images from all over the country, with different backgrounds, lighting, and positions. We also built a special virtual studio that produces labeled images (training material) for the neural networks. In various positions and against various backgrounds, we took more than 40,000 photos of a particular object. We have also compiled a database of manually gathered photographs.

Phase 1. This phase lasted 3 weeks. The neural network took over 3 million training steps during this time.

Phase 2. This phase lasted a few more weeks. We prepared more training material and changed the methodology. The neural network took 3 million more steps. It finally produced an outstanding result that is much less sensitive to the quality of incoming images.

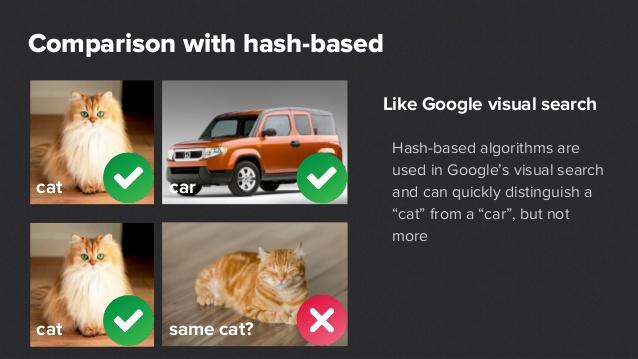

Many algorithms exist, and they offer different levels of recognition:

Hash-based. These algorithms are used in Google’s visual search and can quickly distinguish a “cat” from a “car”, but that is the limit of their capabilities.

SIFT/SURF. These algorithms detect points of interest in an image but are too sensitive to lighting, damage to the original object, etc.

Custom CNN (used in Google Lens, Google AutoML Vision, and other ready-made boxed solutions). These algorithms differentiate well between one class and another but make mistakes when items are visually similar.
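To make the hash-based approach from the list above more concrete, here is a minimal sketch of an average hash, one simple kind of perceptual image hash. It is an illustration only, not the hashing method of any product named in this article. The function names and the tiny 2×2 "images" are assumptions; a real pipeline would first convert the photo to grayscale and downscale it to a small grid such as 8×8.

```python
def average_hash(pixels):
    """Build an integer hash from a small grid of grayscale values.

    Each pixel contributes one bit: 1 if it is brighter than the
    mean brightness of the grid, otherwise 0.
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(hash_a, hash_b):
    """Count the bits in which two hashes differ."""
    return bin(hash_a ^ hash_b).count("1")


# Hypothetical 2x2 grayscale grids standing in for downscaled photos.
original = [[10, 200], [220, 30]]
brighter = [[40, 230], [250, 60]]   # same pattern, more light
different = [[200, 10], [30, 220]]  # inverted pattern

print(hamming_distance(average_hash(original), average_hash(brighter)))
print(hamming_distance(average_hash(original), average_hash(different)))
```

A small distance suggests the two images share the same coarse light and dark layout. That is enough to separate very different objects, but it carries too little detail to tell near-identical products apart.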

TensorFlow – a machine learning framework. We used it to construct a neural network that is well suited to further training with our material.

Google Cloud Vision API is used to recognize labels. To find the best match among the possible results, we used Soundex.

Soundex is an algorithm for comparing two strings by their sound: it assigns the same code to strings that sound similar in English.
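As an illustration of how such codes are built, here is a minimal sketch of the classic American Soundex rules. It is not taken from our production code; the function name `soundex` and the sample words are assumptions chosen for the example.

```python
def soundex(word: str) -> str:
    """Return the four-character American Soundex code of an English word."""
    codes = {}
    for group, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                         ("L", "4"), ("MN", "5"), ("R", "6")):
        for letter in group:
            codes[letter] = digit

    # Keep only the letters A-Z; digits and punctuation are ignored.
    letters = [ch for ch in word.upper() if "A" <= ch <= "Z"]
    if not letters:
        return ""

    result = letters[0]
    previous = codes.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "HW":
            continue  # H and W do not separate letters with the same code
        digit = codes.get(ch, "")  # vowels and Y have no code
        if digit and digit != previous:
            result += digit
        previous = digit
    # Pad with zeros or truncate to exactly four characters.
    return (result + "000")[:4]


print(soundex("Robert"), soundex("Rupert"))
print(soundex("Sony"), soundex("Soni"))
```

Two labels that receive the same code, such as a brand name and a slightly misread version of it, can then be treated as candidates for the same product.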

We also used a full-text search and Levenshtein distance calculation.
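For readers unfamiliar with it, Levenshtein distance is the minimum number of single-character insertions, deletions, and substitutions needed to turn one string into another. The sketch below shows the standard dynamic programming calculation and one way it could be used to pick the closest catalog entry for a recognized label. The helper `best_match` and the model numbers are hypothetical and serve only as an illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Return the edit distance between two strings."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def best_match(label: str, candidates: list[str]) -> str:
    """Hypothetical helper: return the candidate closest to the label."""
    return min(candidates, key=lambda candidate: levenshtein(label, candidate))


# Made-up model numbers; the letter O was read instead of the digit 0.
print(levenshtein("kitten", "sitting"))
print(best_match("RM-ED05O", ["RM-ED050", "RM-ED060"]))
```

A distance threshold can be added on top so that labels with no reasonably close candidate are rejected rather than matched to the nearest wrong entry.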

Already know how your business can benefit from an object or face recognition system? Or maybe you have bold ideas for your business? Please feel free to contact us.

You can also check our full presentation about Remote Control Visual Search.